Junjie (Jorji) Chen

My Chinese name is 陈俊杰 (traditional: 陳俊傑) [Sounds like: Ch-urn June-Jee-eh].

You can call me Jorji.

I was born in November 2002 in Lu’an, Anhui, China. I am currently in the final year of my Master’s degree at Anhui Polytechnic University (AHPU), supervised by Prof. Subin Huang.

I am a Research Intern at the Institute of Artificial Intelligence, Hefei Comprehensive National Science Center, supervised by Prof. Meng Wang. I plan to apply for PhD programs in Fall 2026. I also work with Linfeng Zhang and his research group, where I am learning about and exploring research problems in Efficient AI.

💡 I am broadly interested in understanding how AI systems can better model human psychological and emotional states, and how such understanding can be incorporated into practical and efficient interactive systems.

🎯 Research Focus

I am primarily interested in the following research question:

How can we build psychologically grounded, fine-grained, multimodal, efficient, and full-duplex conversational AI systems that better understand and respond to human emotions?

In particular, I am learning and exploring:

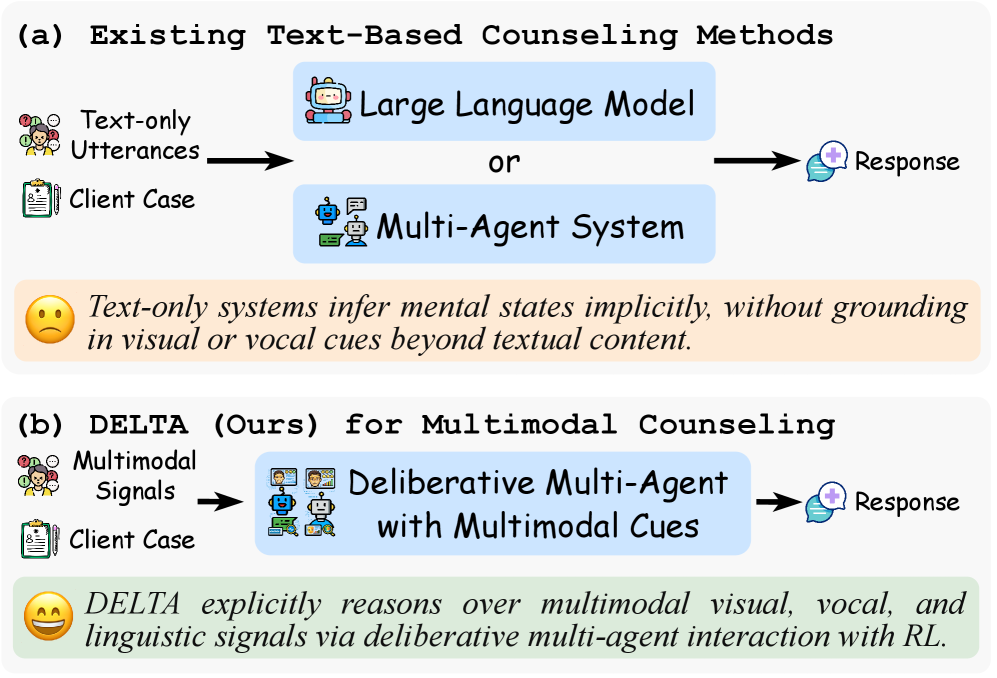

- 🧠 Psychology-informed modeling of emotion and cognition in dialogue systems.

- 🎭 Fine-grained emotion perception, including subtle, dynamic, and context-dependent affect.

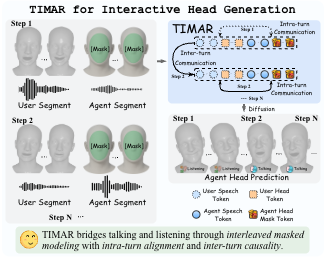

- 🔊🖼️ Multimodal interaction, combining language with visual, acoustic, and behavioral cues.

- ⚡ Efficiency-aware methods for building deployable and scalable dialogue models.

For a deeper dive into my long-term roadmap and methodology, please visit my Research Vision.

🔬 Research Interests

The following areas reflect my current interests and learning directions, which support the above research focus:

-

🧠 AI4Psychology

Computational approaches to modeling psychological processes. -

⚡ Efficient AI

Model compression, acceleration, and efficiency-oriented learning. -

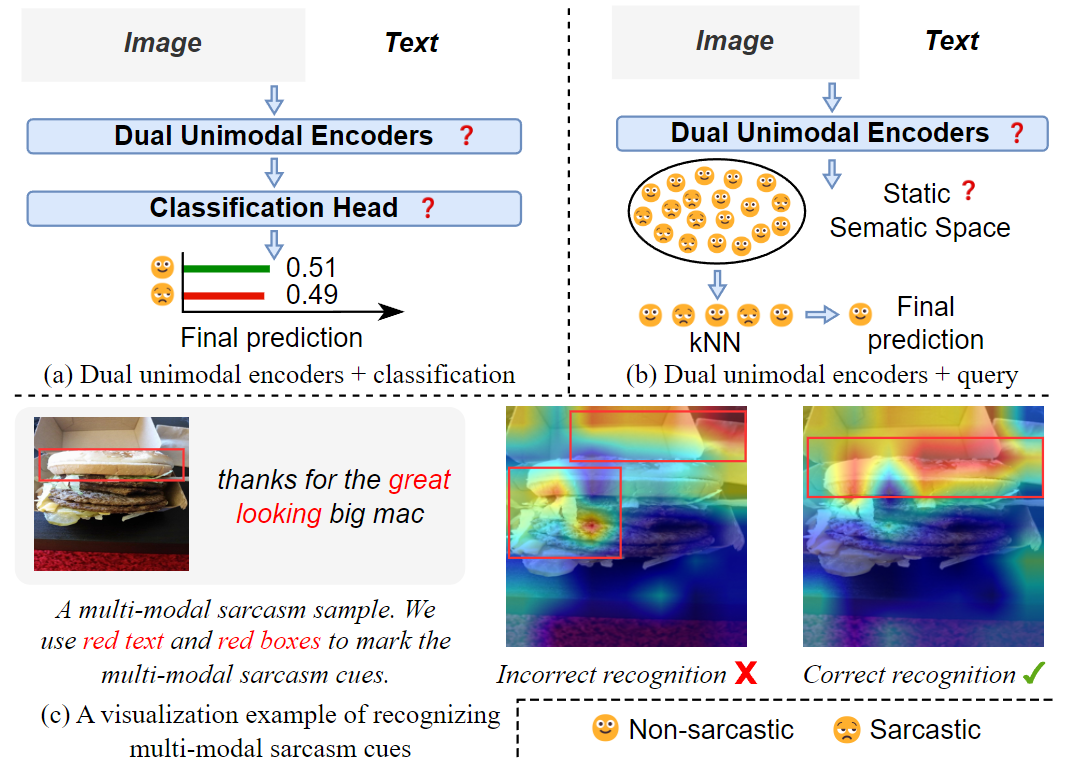

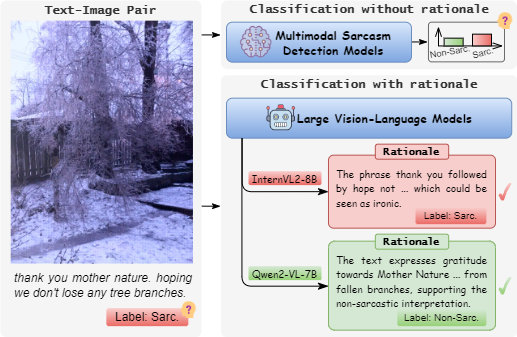

🎭 Multimodal AI

Representation learning and fusion across multiple modalities. -

🖌️ Generative AI

Controlled and interpretable generation for dialogue and simulation. -

🧭 Spatial Intelligence

Embodied and environment-aware reasoning related to interaction and behavior.

These directions are not independent goals; I explore them as part of a broader effort to understand and build psychologically informed conversational AI.

💬 Let’s Collaborate!

I am always happy to learn from and collaborate with researchers interested in:

- Emotion and affect modeling grounded in psychology

- Multimodal dialogue systems

- Efficient and practical conversational AI

If our interests overlap, I would be glad to connect and exchange ideas.

| Nov 06, 2025 | 🥰 Our paper “InterCLIP-MEP: Interactive CLIP and Memory-Enhanced Predictor for Multi-modal Sarcasm Detection” has been accepted for publication in ACM Transactions on Multimedia Computing, Communications and Applications! |

|---|---|

| Sep 05, 2025 | 🎉 Our paper “Seeing Sarcasm Through Different Eyes: Analyzing Multimodal Sarcasm Perception in Large Vision-Language Models” has been accepted for publication in IEEE Transactions on Computational Social Systems! |

| Jul 03, 2025 | ✨ I joined the Institute of Artificial Intelligence, Hefei Comprehensive National Science Center as a research intern to study conversational AI systems grounded in psychological theories with an emphasis on efficiency. |

| Mar 24, 2025 | 🥳 I joined TeleAI as a research intern to study two-stage text-to-video technology. |

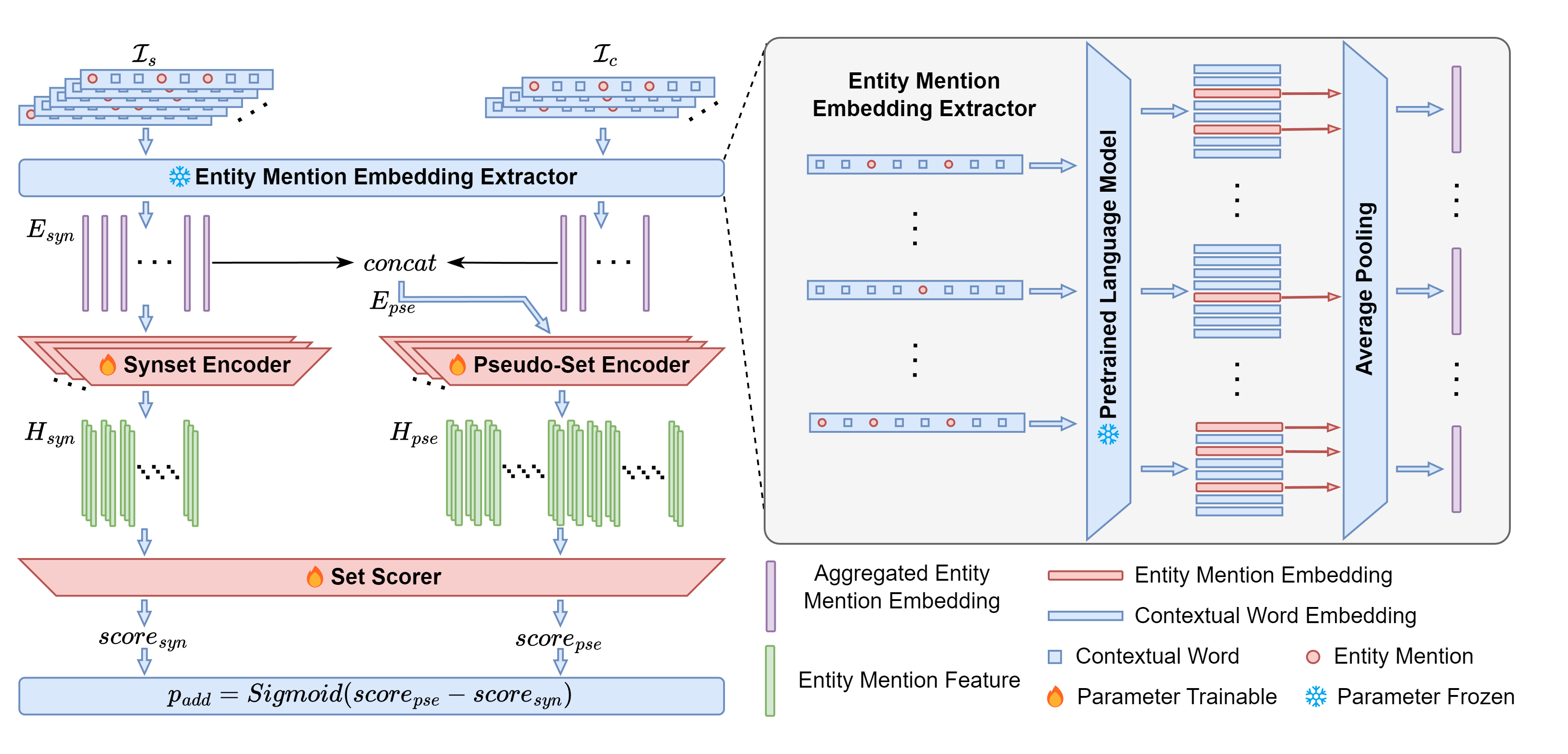

| Feb 28, 2025 | 🥳 Our paper “DE-ESD: Dual Encoder-based Entity Synonym Discovery using Pre-trained Contextual Embeddings” has been accepted for publication in Expert Systems With Applications! |

Research Intern

Pursuing ongoing research on emotion-aware conversational AI, emphasizing psychological grounding, multimodal affect understanding, and efficient full-duplex dialogue systems.

Research Intern

Focused on text-to-3D human motion techniques to support controllable video generation.

Research Assistant

Focused on Efficient AI, with an emphasis on model merging, KV cache compression, and diffusion caching techniques.

Remote Intern

Participated in weekly remote group meetings focused on learning and academic discussion.

Engineering Intern

Worked on high-precision map (HDMap) data format and algorithm design, developed a visualization platform, and built the mapping module for autonomous driving software.